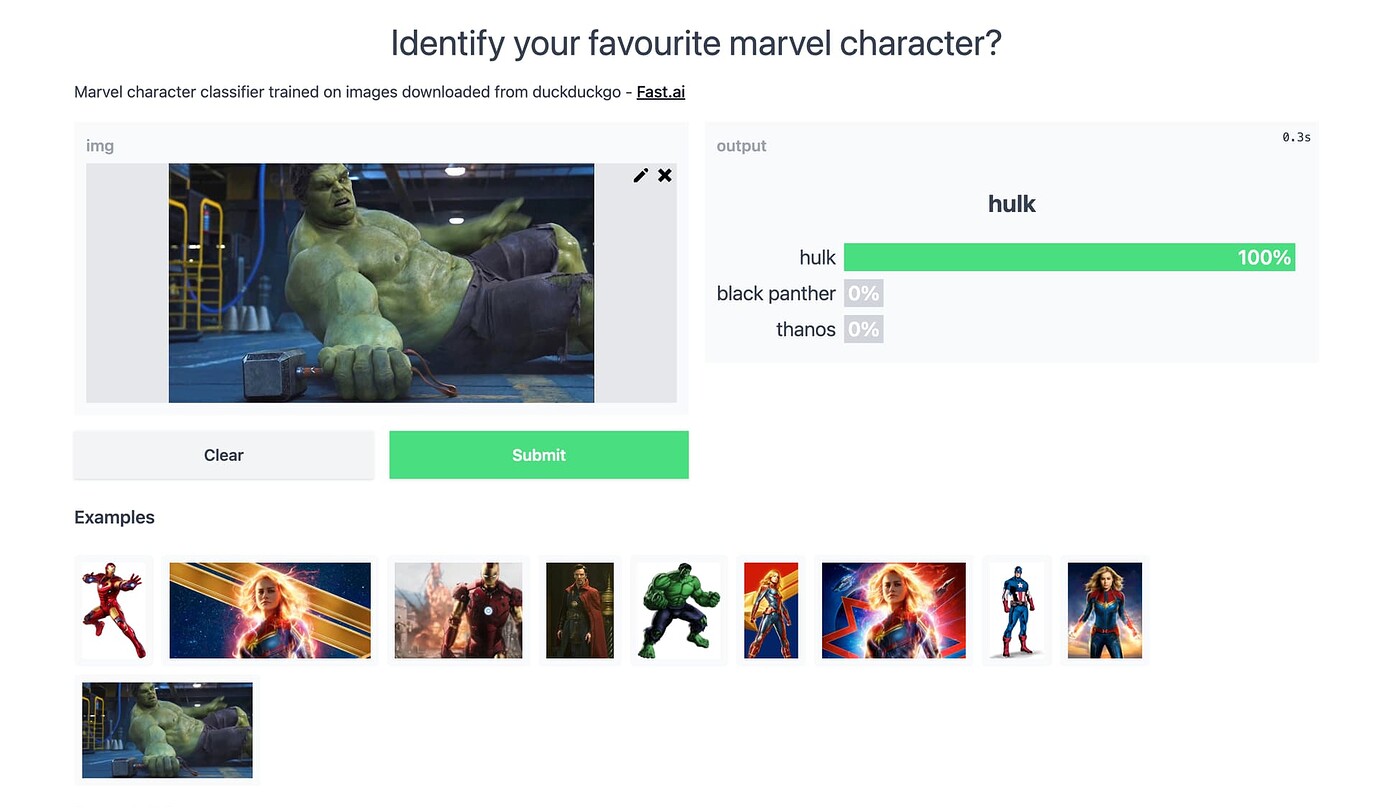

Did you know that it is super easy to deploy a Gradio app on Aceus.com? 🤔 In this post, we will explore how to deploy a Marvel character classifier app on Aceus.com.

You can play with the app here.

For training the classifier, we use a slightly modified version of Jeremy Howard's Kaggle kernel.

To create the Gradio app, all we need is the code below.

```python
from fastai.vision.all import *

# Folder with one sub-folder of images per character
path = Path('marvel_characters')

# Rebuild the DataLoaders so the learner gets the same classes and transforms
dls = DataBlock(
    blocks=(ImageBlock, CategoryBlock),
    get_items=get_image_files,
    splitter=RandomSplitter(valid_pct=0.2, seed=42),
    get_y=parent_label,
    item_tfms=[Resize(256)],
    batch_tfms=aug_transforms(size=224),
).dataloaders(path)

# Recreate the learner and load the weights saved as 'marvel_resnext50'
learn = vision_learner(dls, 'seresnext50_32x4d')
learn.load('marvel_resnext50')

def find_character(img):
    # Predict, then map every class name to its probability
    character, _, probs = learn.predict(img)
    probs = [o.item() for o in probs]
    return dict(zip(dls.vocab, probs))

import gradio as gr

# Image in, top-3 labels out
demo = gr.Interface(
    fn=find_character,
    inputs=gr.inputs.Image(shape=(256, 256)),
    outputs=gr.outputs.Label(num_top_classes=3),
)

if __name__ == "__main__":
    demo.launch(server_name="0.0.0.0", server_port=6006)
```

All we need to do is:

- Create a fastai `DataBlock`
- Create a fastai learner
- Write a predict function
- Create a Gradio `Interface`
- Launch the app
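
To make the predict step more concrete, here is a minimal sketch in plain Python of what `find_character` returns and how a top-3 view like the `Label` output could be derived from it. The class names and probabilities are made up for illustration, and `to_label_dict` and `top_k` are hypothetical helpers, not part of fastai or Gradio.

```python
# Hypothetical labels and scores, standing in for dls.vocab and the
# probabilities returned by learn.predict(img).
vocab = ["black_widow", "hulk", "iron_man", "spider_man", "thor"]
probs = [0.02, 0.05, 0.80, 0.10, 0.03]

def to_label_dict(vocab, probs):
    """Pair each class name with its probability, as find_character does."""
    return dict(zip(vocab, probs))

def top_k(scores, k=3):
    """Return the k highest-scoring (label, probability) pairs."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]

scores = to_label_dict(vocab, probs)
print(top_k(scores))
# [('iron_man', 0.8), ('spider_man', 0.1), ('hulk', 0.05)]
```

In the real app, we return the full dictionary and let the `Label` output pick the top classes, so the predict function stays tiny.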

Let's focus on the last line of the app code; the rest is mostly self-explanatory.

```python
demo.launch(server_name="0.0.0.0", server_port=6006)
```

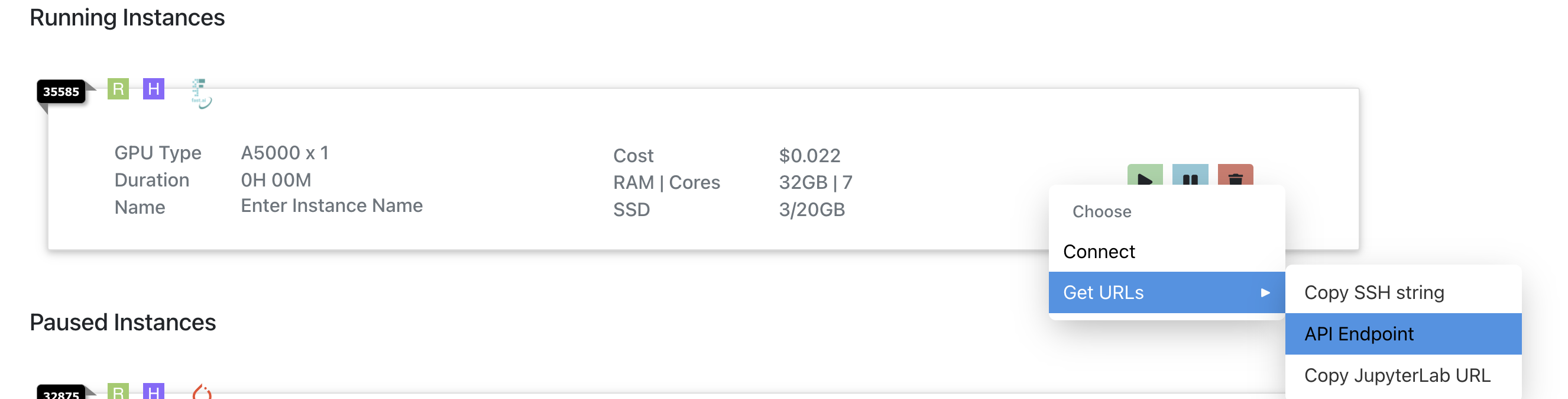

We are asking Gradio to listen on all network interfaces (`server_name="0.0.0.0"`), which makes the app reachable over the network, and to run on port 6006. Aceus.com takes care of the rest and provides you with a URL that you can share with your friends or colleagues. You can access the URL by right-clicking on your running instance.
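
If the URL does not respond, a quick sanity check is to see whether anything is actually listening on the port from inside the instance. Below is a minimal sketch using only the Python standard library; `is_port_open` is a hypothetical helper, and it assumes you run it on the same machine as the app.

```python
import socket

def is_port_open(host, port, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: nothing usable is listening
        return False

if __name__ == "__main__":
    # 6006 is the port passed to demo.launch in this post
    print(is_port_open("127.0.0.1", 6006))
```

If this prints `False` while the script is supposed to be running, the app most likely did not start or is bound to a different port.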

If you do not want to run it on a GPU, simply pause and switch the instance to CPU only.

Would love to know what you build using this 😄.

Code

Training notebook.

Inference notebook.